Black boxes in AI: The Debate

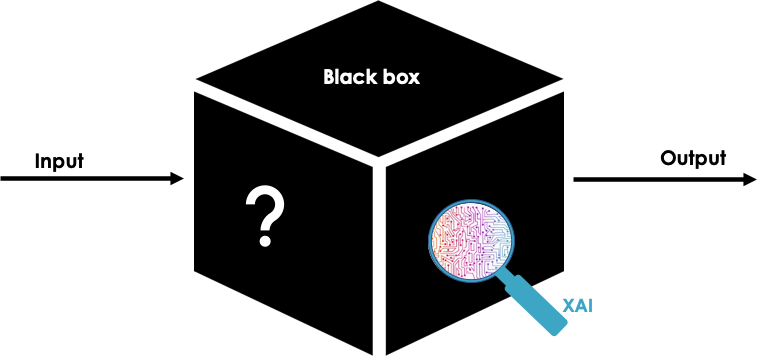

A black box is an Artificial Intelligence (AI) system whose inner processes and operations are not revealed to its end users. Users do not know which AI method and algorithm are used, how many layers the deep-learning model has, what dataset it was trained on, or how the algorithm actually works.

As a result, it is not easy to understand why such AI systems make certain decisions or exhibit certain behaviours. The greatest danger of AI systems that are not explainable, however, is that their decisions may not be justifiable, since we cannot distinguish a good decision from a bad one. Moreover, the self-learning capability of a black-box AI makes it difficult even for its developers to control.

Because of these difficulties, which arise from sacrificing transparency for accuracy and higher performance, various researchers have stressed the importance of knowing what an AI system is learning and why, so that practitioners can intervene and control it (1). This need for transparent and understandable AI systems has led to the development of explainable AI (XAI): AI whose decisions can be understood by humans.

Despite the lack of transparency and the trust concerns they raise, companies are still building AI black boxes, and there is an ongoing debate about whether they should. The most prominent argument is that transparency and explainability will hinder AI performance. Moreover, when practitioners try to explain an AI system, they may leave out so much information that the explanation makes the system less, rather than more, interpretable. Indeed, some simpler AI algorithms (e.g., decision trees) are easy to explain, whereas others (such as deep neural networks) are difficult to explain (2), and making them explainable might hinder their performance. Some practitioners and researchers also claim that explainability is a requirement of those who build AI, not of its actual end users (3). They argue that although it is human nature not to trust something we do not know, or whose workings we cannot understand (4), this should not drive the design of AI systems.
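To make the contrast concrete, here is a minimal Python sketch, assuming a small hand-built decision tree. Because each prediction is just a short sequence of threshold tests, the model can hand those tests back as its explanation. The `Node` class, the `predict_with_explanation` function and the feature names (`visits_this_month`, `minutes_in_aisle`) are hypothetical illustrations, not taken from any system discussed here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A decision-tree node: internal nodes test a feature, leaves carry a label.

    Hypothetical illustration only; not any specific library's API.
    """
    feature: Optional[str] = None    # feature tested at an internal node
    threshold: float = 0.0           # go left if value <= threshold, else right
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None      # set only on leaf nodes


def predict_with_explanation(node: Node, sample: dict) -> tuple:
    """Walk the tree and return (label, list of human-readable tests taken)."""
    path = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path


# Hypothetical tree deciding whether to show a personalised offer.
tree = Node(
    feature="visits_this_month", threshold=3.0,
    left=Node(label="no offer"),
    right=Node(
        feature="minutes_in_aisle", threshold=2.0,
        left=Node(label="no offer"),
        right=Node(label="show offer"),
    ),
)

label, reasons = predict_with_explanation(
    tree, {"visits_this_month": 5.0, "minutes_in_aisle": 4.5}
)
print(label)  # show offer
for reason in reasons:
    print("  because", reason)
```

A deep neural network offers no equally short list of tests to report, which is part of why such models are harder to explain.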

Black Box in Situ: The Start-Up Experience

TrackApp (5) has developed a Software Development Kit (SDK) that can be embedded in third-party mobile applications. Using AI, the SDK can accurately identify the position of a moving object (e.g., a shopper) in an in-store environment and trigger actions. The AI was the result of a research commercialisation effort, and its creator built it as a black box that is not explainable. More specifically, it applies Machine Learning (ML) to the signals received from various transmitters (such as Wi-Fi routers and BLE beacons) to identify users’ in-store positions accurately and make recommendations. This service matters because TrackApp’s clients use it to unlock personalised offers in front of specific shelves, to allow users to enter specific aisles (e.g., alcohol) or, in another domain, to allow users to place sports bets when they enter specific store areas.
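The source does not say which ML technique TrackApp’s system uses. As a hypothetical point of comparison, the sketch below shows a common and comparatively transparent approach to the same task: Wi-Fi/BLE fingerprinting with k-nearest neighbours. Received signal strengths (RSSI, in dBm) are compared with readings recorded at known locations, and the nearest reference readings are returned alongside the estimated zone, so a client can see why a shopper was placed there. The survey data, zone names, transmitter IDs, the -100 dBm floor for unheard transmitters and k = 3 are all assumptions made for illustration.

```python
import math
from collections import Counter

# Hypothetical calibration data: RSSI readings (dBm) recorded at known zones.
FINGERPRINTS = [
    ({"wifi_1": -45, "ble_a": -60, "ble_b": -85}, "alcohol aisle"),
    ({"wifi_1": -48, "ble_a": -58, "ble_b": -88}, "alcohol aisle"),
    ({"wifi_1": -50, "ble_a": -62, "ble_b": -83}, "alcohol aisle"),
    ({"wifi_1": -70, "ble_a": -85, "ble_b": -55}, "checkout"),
    ({"wifi_1": -72, "ble_a": -88, "ble_b": -52}, "checkout"),
]

NOT_HEARD_DBM = -100.0  # assumed value for a transmitter missing from a reading


def rssi_distance(a: dict, b: dict) -> float:
    """Euclidean distance between two RSSI readings over all transmitters seen."""
    transmitters = set(a) | set(b)
    return math.sqrt(sum(
        (a.get(t, NOT_HEARD_DBM) - b.get(t, NOT_HEARD_DBM)) ** 2
        for t in transmitters
    ))


def locate(reading: dict, fingerprints=FINGERPRINTS, k: int = 3):
    """Return (zone, evidence): the majority zone among the k nearest
    fingerprints, plus (zone, distance) for each neighbour as an explanation."""
    scored = sorted(
        ((rssi_distance(reading, fp), zone) for fp, zone in fingerprints),
        key=lambda pair: pair[0],
    )
    nearest = scored[:k]
    votes = Counter(zone for _, zone in nearest)
    zone = votes.most_common(1)[0][0]
    evidence = [(z, round(d, 2)) for d, z in nearest]
    return zone, evidence


zone, evidence = locate({"wifi_1": -71, "ble_a": -86, "ble_b": -54})
print(zone)      # checkout
print(evidence)  # two 'checkout' neighbours and one more distant 'alcohol aisle' one
```

The design choice here is that the evidence travels with the answer: a client who doubts a position can inspect which calibration points it was matched against. Whether such a method would match the accuracy of TrackApp’s own model is exactly the trade-off this post discusses; the sketch makes no claim that it would.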

But the fact that this AI is a non-explainable black box raised many issues for both TrackApp’s clients and its internal team. The clients stated that it was difficult to trust a system that was not transparent and traceable; since they could not understand how it worked, they feared that a false recommendation might damage their company’s reputation with their own customers. They also questioned whether this particular AI achieved the best possible accuracy, or whether an alternative would perform better. Moreover, although accuracy was TrackApp’s competitive advantage and the reason clients preferred its solution over others, the clients’ inability to understand how the recommendations were generated, and whether a user’s computed position was correct, led the company to start considering alternative, simpler solutions.

An added difficulty was that the creator of the system was no longer involved with TrackApp, so it was not easy for the rest of the team to understand the AI system, to prove that the knowledge and recommendations it produced were correct, or to show that it had no significant gaps. It was also difficult for them to understand whether, and why, the system might make mistakes. The team was left trying to construct explanations from the observed relationships between the system’s inputs and outputs, which was not always effective. In the end, the start-up brought the creator of the AI system back to resolve these issues and to redesign and improve the service. However, because the system was a black box, the project was delayed.

My Thoughts

If I had to answer the question of whether to “black box” or not to “black box”, I would probably act like Pythia, the famous oracle of Delphi in Greece, who always gave ambiguous prophecies. In my view there is no silver bullet, but it is preferable to promote collaboration and create synergies between AI systems and humans. Even if a black box cannot be understood, it is important to create AI systems that are able to negotiate with humans. I also agree with researchers who stress the need to create Ambient Intelligence (AmI) systems that amplify AI-human collaboration. In such environments, the AI system interacts with humans, receives information, and learns both from them and from the environment (6). Microsoft MyAnalytics is a good example of such collaboration: it uses AI to give users insights into how they spend their working time, what distracts them, and so on. Users might not know how the system works, and it does make recommendations, but they can intervene in the process and negotiate the outcome, e.g., by defining their working hours, reserving time to focus, and receiving productivity insights and recommendations.

Regarding explainability, there is indeed value in it, and I would recommend using AI algorithms with built-in explainability features. However, explainability is not absolutely necessary in every domain. For example, in public health, do we need to know how an AI system that uses image recognition to predict COVID-19 works before we are willing to use it? Should we sacrifice its accuracy for explainability? Similarly, in the TrackApp case, should the system sacrifice accurate identification of users’ positions for explainability? Do the client company’s managers actually need to know how the AI works, and what would they gain from knowing it in this particular case? The system did lack XAI features, and the rest of the team did lack the knowledge to understand how it worked. Is this acceptable? That is the question!

(1) The Economist. (2018). For artificial intelligence to thrive, it must explain itself.

(2) Rai, A. (2020). Explainable AI: from black box to glass box. Journal of the Academy of Marketing Science, 48 (1), 137–141.

(3) Brandão, R., Carbonera, J., de Souza, C., Ferreira, J., Gonçalves, B., & Leitão, C. (2019). Mediation Challenges and Socio-Technical Gaps for Explainable Deep Learning Applications. ArXiv:1907.07178, 1–39.

(4) Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38.

(5) This name is fictitious to protect the anonymity of the company.

(6) Ramos, C., Augusto, J. C., & Shapiro, D. (2008). Ambient Intelligence — the Next Step for Artificial Intelligence. IEEE Intelligent Systems, 23(2), 15–18.

Profiles

Anastasia is a lecturer in Business Information Systems at NUI Galway. Prior to this position she was a Marie Skłodowska-Curie postdoctoral fellow at Lero (The SFI Research Centre for Software), NUI Galway, where she worked on applied business analytics in software development projects with companies like Intel. She has a PhD in Business Analytics from ELTRUN Research Center of the Athens University of Economics and Business. She has long-term experience working on international research and industrial business analytics projects.

Anastasia has worked as a consultant at Intrasoft International SA, ShopTing BE, and ICAP Group SA, and she has pursued research commercialisation by founding two analytics start-ups. Her research interests lie in the areas of Business Analytics, Artificial Intelligence, Innovation, and Customer Behavior.